- whether your process was agile to start with

- whether "more effective" maps to "more agile"

The first point started me thinking whether I could design a thought experiment by using Little's Law on that anathema of all non-agile processes - Waterfall! (Just say "no" I hear +Karl Scotland say. :-) More on that below, but let's deal with the second point first.

Agility is the ability to change direction quickly in response to changing circumstances. A few businesses operate in very stable conditions and can optimise their processes to just these conditions. For them increasing throughput is usually the highest priority - agility may not be high on their priority list. However most of us working in knowledge-based disciplines find that the needs of our stakeholders change very rapidly. The ability to track this moving target and deliver new ideas faster is the key to being "more effective". For us agility does map to effectiveness.

So if we've decided being more agile is desirable in our context, how to go about it? Here are two possible approaches:

- Look for a checklist of "agile practices" and adopt them (you may need a consultant who's cleverer than me to advise you on which ones must be adopted together and in which order!)

- Look for a way to measure your "agility" then see if these measures improve as you make incremental changes to your current practices.

There is value in both approaches of course, since they are not mutually exclusive. However I know I am not alone in seeing failures in agile adoption initiatives where dependent practices are introduced in the wrong order (for example Scrum for team organisation, without automated build and test for regression testing).

The second approach relies on having some measures of agility. Here are three from a recent David Anderson blog. They are cadences all measured in units of time:

- how often can an organization interact with its customers to make selection and prioritization decisions about what to work on next? (queue replenishment period)

- how quickly can the work be completed from making a commitment to do it? (lead time)

- how often can new completed work be delivered and operational? (release period)

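Little's Law relates the second of these, lead time, to two other quantities (all taken as averages):

Lead Time = WIP / Delivery Rate, where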

Lead Time is the time a work item spends in the process (TIP or time in process is an alternative term)

Delivery Rate is the number of items delivered per unit of time (also called Throughput)

WIP is the number of work items in the process at any point in time.
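As a minimal sketch, the relationship can be written as a small Python function. The name `lead_time` and its parameters are illustrative only (they are not from any library), and the numbers in the example call are made up.

```python
def lead_time(wip: float, delivery_rate: float) -> float:
    """Little's Law: average lead time = average WIP / average delivery rate.

    wip           -- average number of work items in the process
    delivery_rate -- average items delivered per unit of time (throughput)
    The result is in the same time unit used for delivery_rate.
    """
    if delivery_rate <= 0:
        raise ValueError("delivery_rate must be positive")
    return wip / delivery_rate


# Illustrative numbers only: 12 items in process, 3 delivered per week.
print(lead_time(12, 3))  # 4.0 (weeks)
```

Rearranging the same formula gives WIP = Lead Time * Delivery Rate, which is handy when the time spent in a stage is known but the number of items in it is not.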

The initial waterfall(-ish) process

Our initial scenario is a product development company releasing a new version of a product roughly every 6 months, say 120 working days. It's not as extreme as some waterfall projects where no customer deliveries are made until years after the requirements are defined, but nor is it very agile.

Let's say this project releases 24 new "Features" (independently releasable functionality) which are selected at the start of the analysis phase for each 6-monthly release. Each Feature can be further broken down to around 10 User Stories. (Or whatever the waterfall equivalent is! Development tasks say.) There are 3 stages in our (very simplified) process: Analysis, Development, and Release. Testing is included in the Development phase (something they must have learned from a passing agile team!).

- Analysis of a Feature takes a Business Analyst an average of 20 working days. The team has 4 Analysts so 24 Features will take 120 working days to specify.

- Development of a User Story takes 2 developers (possibly a Programmer and a Tester) 5 elapsed days to complete. With a team of 20 developers, this phase will take around 120 working days to complete the 240 stories.

- Release typically takes 10 days elapsed regardless of the size of the release. We assume however that development and analysis work may continue in parallel with this phase.
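The phase durations quoted above follow from simple arithmetic. Here is a rough sketch of it in Python; the variable names are mine, and it assumes the analysts work in parallel on separate Features and the 20 developers form 10 pairs.

```python
FEATURES_PER_RELEASE = 24
STORIES_PER_FEATURE = 10

# Analysis: 20 working days per Feature per analyst, 4 analysts in parallel.
analysis_days = FEATURES_PER_RELEASE * 20 / 4

# Development: each story occupies a pair of developers for 5 days,
# and 20 developers form 10 pairs working in parallel.
stories = FEATURES_PER_RELEASE * STORIES_PER_FEATURE
pairs = 20 // 2
development_days = stories * 5 / pairs

print(analysis_days, development_days)  # 120.0 120.0
```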

- queue replenishment period: this is around 120 working days. When a release comes out and the development team start on the next release, the analysts start on the release after that, so it's 120 days between each of these requirement selection meetings.

- lead time: from selection of the 24 Features, through their analysis (120), development (120) and release (10), the wait is 250 working days

- release cadence: this is the 120 working days between releases

The average Delivery Rate for this process is 0.2 Features per working day.
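For anyone wanting to vary the numbers, the three cadences and the Delivery Rate for this waterfall scenario can be tallied as below. This is only a sketch with names of my own choosing, using the stage durations given above.

```python
ANALYSIS_DAYS = 120
DEVELOPMENT_DAYS = 120
RELEASE_DAYS = 10
FEATURES_PER_RELEASE = 24

# Features are selected once per analysis cycle.
replenishment_period = ANALYSIS_DAYS

# A Feature passes through all three stages in sequence.
lead_time_days = ANALYSIS_DAYS + DEVELOPMENT_DAYS + RELEASE_DAYS

# A release follows each development cycle.
release_period = DEVELOPMENT_DAYS

delivery_rate = FEATURES_PER_RELEASE / release_period

print(replenishment_period, lead_time_days, release_period, delivery_rate)
# 120 250 120 0.2
```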

Let's compare this with the ideal continuous process assuming the same productivity as above but releasing as frequently as possible. Now such a change cannot be made immediately - it's a major mistake to increase the frequency of releases without improving automated build and test for example. It's more important to select smaller incremental steps where the risk of breaking the system is lower but effectiveness (including agility) can be shown to improve. But for the purpose of our thought experiment let's jump forward, assuming the basic numbers are the same, but we've reached a stage where the process can run continuously. Let's also assume the 4 analysts working together could deliver one Feature spec in 5 days rather than the 20 taken by one lone analyst.

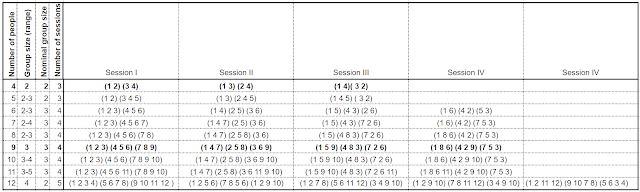

WIP Limits for the New Process

- queue replenishment cadence: as a new Feature is started every 5 days, we can select Features one at a time every 5 days (down from 120). If the business selected 2 at a time the period would be 10 days.

- lead time: from selection of each Feature, through its analysis (5), development (20) and release (10) the wait is 35 working days (down from 250!)

- release cadence: this is now down to 10 working days between releases (down from 120). This is the minimum without addressing the release process itself - an obvious place to look for further improvement.

The average Delivery Rate is still 0.2 Features per working day (this is inherent in our assumptions above).
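The before-and-after comparison can be laid out side by side with a few lines of Python. This is a sketch only: the dictionary keys and variable names are my own, and the stage times are the ones stated in the text.

```python
# Waterfall figures from the earlier scenario, in working days.
waterfall = {"replenishment": 120, "lead_time": 250, "release": 120}

# Continuous process: stage times per Feature as stated in the text.
ANALYSIS_DAYS = 5       # 4 analysts working together on one Feature
DEVELOPMENT_DAYS = 20
RELEASE_DAYS = 10

continuous = {
    "replenishment": ANALYSIS_DAYS,  # a new Feature starts every 5 days
    "lead_time": ANALYSIS_DAYS + DEVELOPMENT_DAYS + RELEASE_DAYS,
    "release": RELEASE_DAYS,         # back-to-back releases
}

# One Feature arrives every 5 days, so each 10-day release carries two.
features_per_release = RELEASE_DAYS // ANALYSIS_DAYS
delivery_rate = features_per_release / RELEASE_DAYS

for name in waterfall:
    print(f"{name}: {waterfall[name]} -> {continuous[name]} working days")
print(f"delivery rate: {delivery_rate} Features per working day")
```

Changing `RELEASE_DAYS` or `ANALYSIS_DAYS` here gives a quick feel for which stage dominates the lead time.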

The conclusion from this experiment? Agility can be measured and it can be improved radically by changing batch processes into continuous ones (or making batches as small as possible). Agile practices are needed to enable effectiveness and improved productivity at low batch sizes, but the pay-off is seen in the reduction in the times between queue replenishments and releases... and most especially in the reduction in lead time.

Footnote on Little's Law calculations:

The improvements in the 3 agile cadences in this thought experiment are based simply on adding up the times suggested by our scenario. We can derive these results using Little's Law instead, which is the easier route when the numbers don't drop out as neatly as they do in our simple scenario. First let's re-run the scenarios above.

Here's the initial waterfall process:

Average Delivery Rate = 0.2 Features per day

Average WIP = 24 Features in Analysis + 24 Features in Development + (24 in Release) * 10 days / 120 days

Thus Average WIP = 50 Features

This results in an Average Lead Time = 50 / 0.2 = 250 days

Here's the more continuous process:

Average Delivery Rate = 0.2 Features per day (still)

Average WIP = 1 Feature in Analysis + 4 Features in Development + 2 in Release

Thus Average WIP = 7 Features

This results in an Average Lead Time = 7 / 0.2 = 35 days
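The two calculations above can be reproduced in a few lines. In this sketch the helper `average_wip_in_stage` is a name I've made up; it simply weights the items in a stage by the fraction of each cycle the stage is occupied. `Fraction` is used so the arithmetic stays exact.

```python
from fractions import Fraction

DELIVERY_RATE = Fraction(1, 5)  # 0.2 Features per working day


def average_wip_in_stage(items: int, busy_days: int, cycle_days: int) -> Fraction:
    """Average WIP of a stage holding `items` for `busy_days` out of every `cycle_days`."""
    return Fraction(items * busy_days, cycle_days)


# Waterfall: Analysis and Development are always full; Release is
# occupied for only 10 days out of each 120-day cycle.
waterfall_wip = (
    average_wip_in_stage(24, 120, 120)
    + average_wip_in_stage(24, 120, 120)
    + average_wip_in_stage(24, 10, 120)
)

# Continuous: 1 in Analysis + 4 in Development + 2 in Release.
continuous_wip = 1 + 4 + 2

print(waterfall_wip, waterfall_wip / DELIVERY_RATE)    # 50 250
print(continuous_wip, continuous_wip / DELIVERY_RATE)  # 7 35
```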

What would be the impact if we could reduce the 10 days of the release process down to 1 day (maybe by introducing techniques from Continuous Delivery)? What would the new Lead Time be? Is it just 9 days less? Let's see.

With a 1 day release process, we can deliver Features one by one. At 0.2 Features per day that means a release on average every 5 days. So the figures become:

Average Delivery Rate = 0.2 Features per day (still our assumption)

Average WIP = 1 Feature in Analysis + 4 Features in Development + (1 in Release) * 1 day / 5 days

Thus Average WIP = 5.2 Features

This results in an Average Lead Time = 5.2 / 0.2 = 26 days

So the reduction is indeed the full 9 days, matching the simple sum of stage times (5 + 20 + 1). The numbers won't always drop out this neatly, which is where Little's Law proves its worth: it lets us continue the thought experiment and gauge the impact of improvements to other aspects of the process.

Why not try a few scenarios yourself?
