Burning down user stories sprint by sprint at a nice constant velocity is what we hope to be seeing on agile projects. What happens though if there are defects in the work we've delivered and we have to start planning the defect fixing as well as the new work? How should this situation be approached?

Firstly, don't deliver defects! Easy to say but we know that all teams deliver defects at some points. If you start getting a lot of defects back though it's a pretty sure sign that you have a false velocity - you're reporting work as done when it isn't really. It simply hasn't been tested enough. So the second point is that you have to face reality about the velocity of your team. Get the testing done and automated before you deliver the next set of stories.

All well and good but what about planning the next few sprints? How do we plan reliably now when we are not sure how much defect fixing we're going to have to do along side the new user stories. Here's one approach that seems to work... 2 velocities!

The overall velocity of the team is how many "points" the team burns down in a sprint. We want to ensure this is pretty constant, always assuming that the team stays constant and we're not carrying over too much work in progress at sprint boundaries (see here for a previous discussion of that problem). The second important velocity though is "effective velocity" - the amount of new client-required work that is burned down per sprint. This excludes work on defects (which means the higher velocity reported in sprints when you delivered the defects is balanced) and work on refactoring and process improvement (necessary but not what the client is paying for). You can see the effective velocity sprint by sprint by creating a new folder each sprint to contain just the new work completed/targeted).

Giving the team visibility of these metrics is important so they can see the impact of defects and also appreciate improvements when the effective velocity is restired.

The Improving Projects blog from Huge IO (UK & Ireland) is primarily about products, organisations and projects... and how to improve them. As well as musings on agile processes, software engineering in general, and methods like Kanban and Scrum, there's advice here too for users of process planning, execution and improvement tools - and the metrics they can provide. https://uk.huge.io

Subscribe to:

Post Comments (Atom)

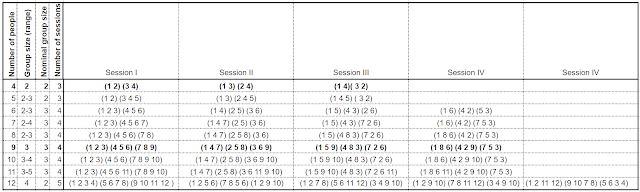

Breakout sessions that ensure everyone in the meeting meets everyone else

Lockdown finds us doing more and more in online meetings, whether it's business, training, parties or families. It also finds us spendin...

-

Cost of Delay (CoD) is a vital concept to understand in product development. It should be a guide to the ordering of work items, even if - ...

-

When starting to use xProcess there are a number of terms that may be unfamiliar. What for example is an "overhead" task? In gener...

-

Ron Lichty is well known in the Software Engineering community on the West Coast as a practitioner, as a seasoned project manager of many su...

No comments:

Post a Comment